A few days ago I found myself in the lobby of my car repair shop waiting for our vehicle's inspection to be completed. The nightly news was blaring from a TV, and there was a woman in tears on the screen. She had either missed or ignored Shutterfly's recent storage policy change, which read in part:

In January 2023, Shutterfly’s photo storage policy was updated, offering unlimited photo storage to active customers who make a purchase every 18 months. As part of the policy update, any photos stored in a customer’s inactive account would be permanently removed from our servers.

She was devastated: by neglecting to keep her account active, she had lost decades' worth of photos.

As sympathetic as I was to the woman on TV, I have to admit I had a moment of mental superiority. I couldn't help but think: Hah! That's what you get when you trust an unreliable platform to store your data. I store my images safely in Google Photos, so I'm good.

It took about 30 seconds for me to see the flaw in my thinking. The woman's sin wasn't merely relying on an untrusted storage partner; it was that she opted to store her data in a single location. From that perspective, I was just as guilty as her. While it's unlikely that Google Photos will disappear any time soon, it's still not ideal to have precious data housed without a backup.

Backing up a Lifetime of Photos

This got me thinking: how could I export the 25,000 or so pics I have in Google Photos to Amazon's S3 platform? S3 is relatively cheap and, most importantly, isolated from Google's hardware and policies.

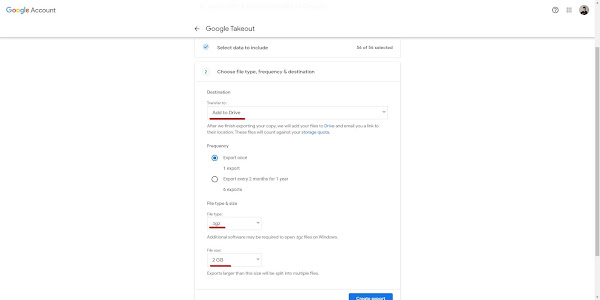

Google solves a big part of the problem with their Takeout service. Takeout bundles the personal data you have stored with Google into .zip or .tgz files that you can then download.

Exporting my Photo library to a series of .tgz files that are accessible in Google Drive took just a few clicks.

Once I had the 58(!) export files, each one weighing in at 50 gig, how was I supposed to pull them off of Google and push them to S3?

The solution I arrived at is found in takeoutassist. This shell script uses the command line tools gdrive and aws to pull files from Drive and push them to S3.

The script assumes that any top level folder in Google Drive named Takeout was generated by Google Takeout. One at a time, it downloads a file from this directory, uploads it to an S3 bucket, verifies the upload was successful and then deletes the file from Google Drive.
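For anyone who wants the gist without reading the script, here is a minimal sketch of that loop. It is not the actual takeoutassist source. The three Drive-side helpers are hypothetical placeholders to fill in with your Drive client of choice, and the `aws s3 ls` check is only my assumption about what "verify" could mean; the real script may check something stronger.

```shell
# Drive-side helpers: hypothetical placeholders, to be filled in with
# whatever Google Drive client you use.
list_takeout_files() { :; }   # print one file name per line
download_file() { echo "download_file: not implemented" >&2; return 1; }  # $1=name $2=local dir
delete_remote() { echo "delete_remote: not implemented" >&2; return 1; }  # $1=name

# S3-side helpers built on the aws CLI.
upload_file() { aws s3 mv "$1" "$2"; }        # $1=local path $2=s3:// URI
upload_ok() { aws s3 ls "$1" > /dev/null; }   # assumed check: the object is listable

# Move every listed file through the holding directory, one at a time.
relay_takeout() {
  local holding="$1" dest="$2" name
  mkdir -p "$holding" || return 1
  list_takeout_files > "$holding/.pending" || return 1
  while IFS= read -r name <&3; do
    [ -n "$name" ] || continue
    echo "Downloading $name"
    download_file "$name" "$holding" || return 1
    upload_file "$holding/$name" "$dest/$name" || return 1
    # Only delete the Drive copy once the upload has been confirmed.
    if upload_ok "$dest/$name"; then
      delete_remote "$name" || return 1
      echo "Deleted '$name'"
    else
      echo "Could not verify $name; leaving it on Drive" >&2
      return 1
    fi
  done 3< "$holding/.pending"
}
```

With the placeholders filled in, a call would look like `relay_takeout .takeoutassist/holding s3://my-bucket/takeout/2023-05-02`, where the bucket name is made up. Handling one file at a time means the holding directory never needs room for more than a single 50 gig archive, and stopping at the first failed check means nothing is deleted from Drive unless its copy has been seen in S3.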

The process looks like this and is slow as molasses:

    $ takeoutassist -a archive -p benjisimon@gmail.com -t 2023-05-02
    Downloading takeout-20230502T052704Z-055.tgz
    Successfully downloaded takeout-20230502T052704Z-055.tgz
    move: .takeoutassist/holding/takeout-20230502T052704Z-055.tgz to s3://archive-benjisimon-com/takeout/benjisimon_gmail.com/2023-05-02/takeout-20230502T052704Z-055.tgz
    Deleted 'takeout-20230502T052704Z-055.tgz'
    Downloading takeout-20230502T052704Z-057.tgz
    ...

Thankfully the process is automatic, so I can kick it off and forget about it.

In another few months I'll put in another Takeout request to grab a fresh backup. At that point, I'll add a feature to takeoutassist to remove the old Takeout data from S3 to avoid paying for multiple copies of my backed up data.
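That cleanup feature doesn't exist yet, but one way it might work is sketched below. It assumes the layout visible in the log above, with one folder per Takeout date under a common S3 prefix. The function names are hypothetical, and `prune_old_takeouts` permanently deletes data, so treat it as a starting point rather than something to run blindly.

```shell
# Read snapshot dates (one per line) and print all but the newest.
# YYYY-MM-DD dates sort chronologically as plain text.
stale_snapshots() {
  sort -u | sed '$d'
}

# Hypothetical wrapper: $1 is the S3 prefix that holds one folder per date.
prune_old_takeouts() {
  local prefix="$1" day
  # "aws s3 ls" prints sub-folders as lines of the form "PRE 2023-05-02/".
  aws s3 ls "$prefix/" |
    awk '$1 == "PRE" { sub("/$", "", $2); print $2 }' |
    stale_snapshots |
    while IFS= read -r day; do
      aws s3 rm --recursive "$prefix/$day/"
    done
}
```

Keeping the date logic in its own small function makes it easy to check what would be removed, for example with `printf '2023-05-02\n2023-08-15\n' | stale_snapshots`, before letting anything touch the bucket.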

I feel for the woman on the news, but at least I could put her pain to use as motivation to avoid her fate.
